- URL EXTRACTOR FROM MULTIPLE WEBSITE SOFTWARE
- URL EXTRACTOR FROM MULTIPLE WEBSITE PORTABLE
- URL EXTRACTOR FROM MULTIPLE WEBSITE HOW TO
- URL EXTRACTOR FROM MULTIPLE WEBSITE INSTALL

Vovsoft URL Extractor supports file masks to help you filter the files.
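The program's exact mask syntax isn't described here. As a hedged illustration of the general idea, the Python sketch below treats masks as shell-style wildcards such as `*.html` and keeps only the file names that match. The function names and the list-of-masks format are assumptions made for this example, not Vovsoft's actual behavior.

```python
import fnmatch
import os


def matches_any_mask(filename: str, masks: list[str]) -> bool:
    """Return True if the file's base name matches at least one wildcard mask.

    Both sides are lowercased, so "*.txt" also matches "NOTES.TXT".
    """
    name = os.path.basename(filename).lower()
    return any(fnmatch.fnmatchcase(name, mask.lower()) for mask in masks)


def filter_by_masks(filenames: list[str], masks: list[str]) -> list[str]:
    """Keep only the file names that match one of the masks, preserving order."""
    return [f for f in filenames if matches_any_mask(f, masks)]


if __name__ == "__main__":
    files = ["index.html", "notes.TXT", "photo.jpg", "docs/links.htm"]
    # "*.htm*" catches both .htm and .html files
    print(filter_by_masks(files, ["*.htm*", "*.txt"]))
    # → ['index.html', 'notes.TXT', 'docs/links.htm']
```

Lowercasing both sides before matching is a deliberate choice so the filter behaves the same on every platform, roughly mirroring how Windows treats file names.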

All the options are clear and simple, and they all fit within a one-window interface. All you need to do is select the files you want the application to analyze and press the "Start" button.

Links on a live web page can also be pulled straight from the browser. I'm using the Select Committee inquiries list from the 2017 Parliament page as an example: it is a page with a massive number of links that, as a group, may be useful to a lot of people. Now we just need to open up the developer console and run the code.
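The console code itself is not reproduced here. As a rough Python stand-in, assuming you have already saved the page's HTML locally, the sketch below uses the standard library's `html.parser` to collect each link's text together with its absolute URL, which suits a page like the inquiries list where the link text names each item. `LinkCollector` and `collect_links` are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect (link text, absolute URL) pairs for every <a href> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None  # absolute URL of the <a> currently open, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # ignore anchors without an href, e.g. <a name="top">
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # collapse runs of whitespace and newlines inside the link text
            text = " ".join("".join(self._text).split())
            self.links.append((text, self._href))
            self._href = None


def collect_links(html: str, base_url: str) -> list[tuple[str, str]]:
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    page = '<li><a href="/inquiry/1">Trade <b>inquiry</b></a></li>'
    print(collect_links(page, "https://example.com/committees/"))
    # → [('Trade inquiry', 'https://example.com/inquiry/1')]
```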

# URL EXTRACTOR FROM MULTIPLE WEBSITE SOFTWARE

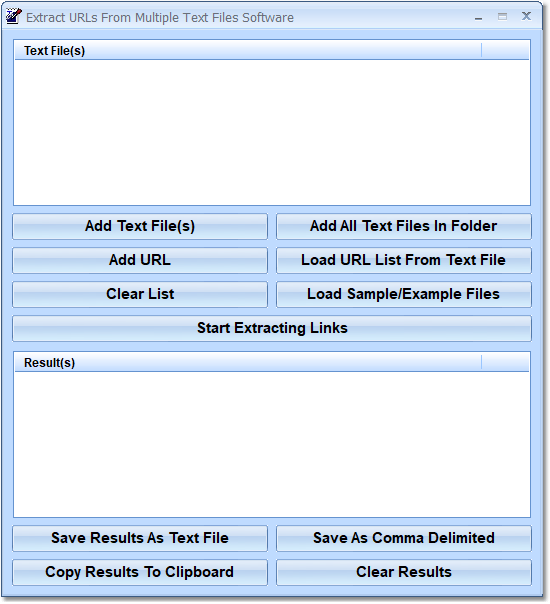

Select Committee inquiries from the 2017 Parliament.

Vovsoft URL Extractor is one of the best programs for harvesting http and https web page addresses: you can extract and recover all URLs from files in seconds. Once installed, you can start the application and begin searching for links almost immediately. You only need to provide a directory, and the program takes care of the rest: it scans the entire folder for files that contain links, displays them all in its main window, and lets you export the list to a file.
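The program's internals aren't described, but the folder-scanning idea can be approximated in a few lines of Python. This sketch is assumption-laden: it walks a directory tree, reads every file as UTF-8 text while ignoring undecodable bytes, pulls out http and https addresses with a simple regular expression, removes duplicates, and writes the result one URL per line. The regex and the function names are illustrative, not the program's own.

```python
import os
import re

# Deliberately simple pattern for http:// and https:// addresses: a match runs
# until whitespace, a quote or an angle bracket. Real-world URL matching is messier.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def extract_urls_from_folder(root: str) -> list[str]:
    """Walk every file under root and return unique http(s) URLs in first-seen order."""
    found = {}  # a dict keeps insertion order and ignores repeated keys
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # visit subfolders in a stable, alphabetical order
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            # errors="ignore" lets binary or oddly encoded files be skimmed without crashing
            with open(path, encoding="utf-8", errors="ignore") as fh:
                for match in URL_PATTERN.findall(fh.read()):
                    # strip punctuation that usually ends a sentence rather than a URL
                    found.setdefault(match.rstrip(".,;:)"), None)
    return list(found)


def export_urls(urls: list[str], out_path: str) -> None:
    """Write one URL per line, similar to exporting the result list to a file."""
    with open(out_path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(urls) + "\n")
```

A typical call would be `export_urls(extract_urls_from_folder("some/folder"), "urls.txt")`. The trailing `rstrip` is only a heuristic: it also trims a legitimate closing parenthesis at the end of a URL.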

Sometimes we need to grab all URLs (Uniform Resource Locators) from files and folders, and browsing every folder to scrape the web links by hand is hard work. Fortunately, Vovsoft URL Extractor can help when you need URL scraper software.

# URL EXTRACTOR FROM MULTIPLE WEBSITE PORTABLE

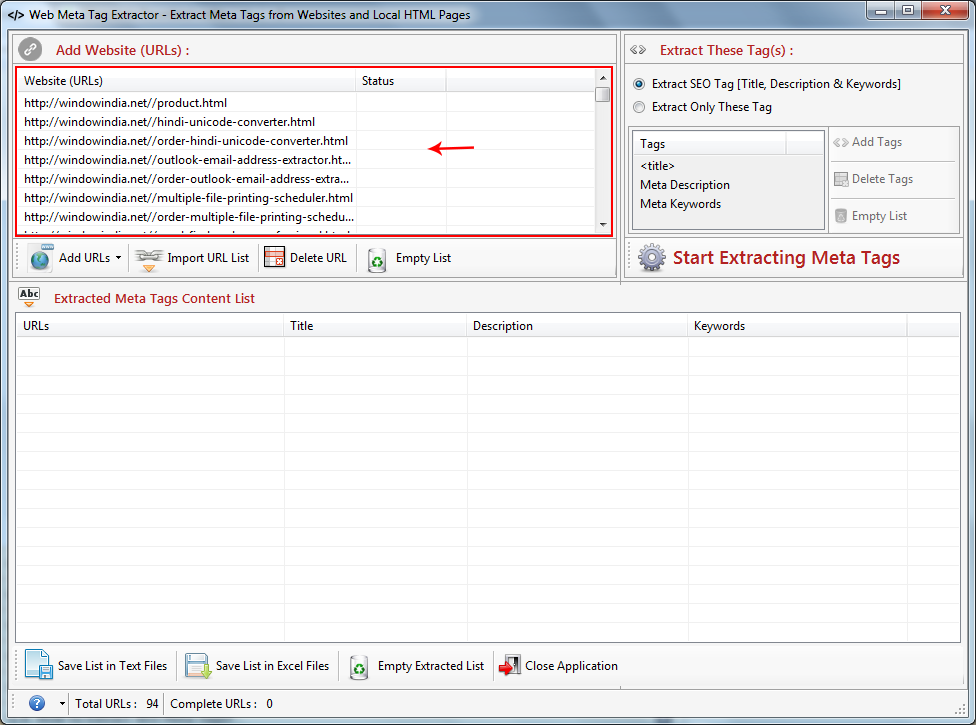

A URL extractor extracts URLs from websites. It can retrieve every valid URL and all HTML files, then create an output file that contains no duplicates, and you can also program it to ignore the URLs you do not want. The process can be done on a home computer with URL extractor software: first select the web pages you would like analyzed and save them to your hard drive, then start the URL extractor software and it will give you the output you requested. This makes it a helpful tool for businesses that are crawling the web for information.

A URL extractor also lets the user specify a list of web pages to use as navigation starting points and then reach other web pages through cross navigation. It can even start from keywords, run searches on search engines, and cross-navigate the websites it finds to extract URLs and email addresses. A related kind of tool simply opens links in bulk: you enter URLs one per line, or separated by commas or spaces, and it opens all of them in a single click.

VovSoft URL Extractor 1.4 + Portable (3.3/2.2 MB). Give it a shot and use it for your own analysis or evaluation.
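Starting from a list of pages and cross-navigating to others is essentially a breadth-first crawl. The sketch below is a minimal version under stated assumptions: the caller supplies a hypothetical `fetch(url)` function that returns a page's HTML (or `None`), exclusions are plain substrings, and only `<a href>` links are followed. It also shows one way to split input given one per line or separated by commas or spaces. Keyword search and email extraction are left out.

```python
import re
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Iterable, Optional
from urllib.parse import urldefrag, urljoin


class _HrefParser(HTMLParser):
    """Gather the raw href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def parse_url_list(text: str) -> list[str]:
    """Split input given one per line, or separated by commas or spaces."""
    return [part for part in re.split(r"[\s,]+", text) if part]


def crawl(
    start_urls: Iterable[str],
    fetch: Callable[[str], Optional[str]],
    exclude: Iterable[str] = (),
    max_pages: int = 50,
) -> list[str]:
    """Breadth-first crawl from start_urls, following <a href> links.

    fetch(url) is supplied by the caller and returns HTML or None.
    Any URL containing one of the `exclude` substrings is ignored.
    At most max_pages pages are fetched.
    Returns every discovered URL exactly once, in discovery order.
    """
    exclude = tuple(exclude)

    def allowed(url: str) -> bool:
        return url.startswith(("http://", "https://")) and not any(s in url for s in exclude)

    seen = {}  # insertion-ordered set of discovered URLs
    queue = deque()
    for url in start_urls:
        if allowed(url) and url not in seen:
            seen[url] = None
            queue.append(url)

    fetched = 0
    while queue and fetched < max_pages:
        page_url = queue.popleft()
        html = fetch(page_url)
        fetched += 1
        if html is None:
            continue
        parser = _HrefParser()
        parser.feed(html)
        for href in parser.hrefs:
            # resolve relative links and drop #fragments so /page and /page#top count once
            link, _fragment = urldefrag(urljoin(page_url, href))
            if allowed(link) and link not in seen:
                seen[link] = None
                queue.append(link)
    return list(seen)
```

Passing `fetch` in keeps the crawl logic testable with a dictionary of fake pages (for example `crawl(urls, pages.get)`). A real crawler would plug in an HTTP client and add politeness measures such as rate limiting and robots.txt checks, which this sketch does not include.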

# URL EXTRACTOR FROM MULTIPLE WEBSITE HOW TO

While `<a>` and `<img>` elements are the primary sources of URLs, there are more than 70 element attributes that carry URLs in HTML, XHTML, WML, and assorted HTML extensions, so a thorough extractor needs to handle all of them. For fetching pages, requests lets you send HTTP/1.1 requests extremely easily. It is not part of Python's standard library; install it with `pip install requests`.
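Going beyond `<a href>` and `<img src>` mostly means checking more attribute names. The Python sketch below covers only a hand-picked handful of URL-bearing attributes (nowhere near the full list of 70+) plus the comma-separated `srcset`, resolves relative values against a base URL, and skips `javascript:` and `data:` values. It uses the standard library's `html.parser` rather than requests or Beautiful Soup so that it runs without extra installs; the attribute set is an assumption you would extend for real use.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# A partial, hand-picked set of attributes whose values are single URLs.
# The full inventory across HTML, XHTML, WML and extensions is much larger.
SINGLE_URL_ATTRS = {
    "href", "src", "action", "formaction", "cite", "poster",
    "data", "longdesc", "background", "codebase", "manifest",
}


class UrlAttributeParser(HTMLParser):
    """Record (tag, attribute, absolute URL) for every URL-bearing attribute seen."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def _add(self, tag, attr, value):
        value = value.strip()
        # skip empty values and pseudo-URLs that do not point at another resource
        if value and not value.lower().startswith(("javascript:", "data:")):
            self.urls.append((tag, attr, urljoin(self.base_url, value)))

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; self-closing tags such as
        # <img ... /> also arrive here via the default handle_startendtag.
        for attr, value in attrs:
            if value is None:  # valueless attribute, e.g. <input disabled>
                continue
            if attr in SINGLE_URL_ATTRS:
                self._add(tag, attr, value)
            elif attr == "srcset":
                # "small.jpg 1x, large.jpg 2x": take the URL from each candidate.
                # Simplification: URLs that themselves contain commas get split.
                for candidate in value.split(","):
                    parts = candidate.split()
                    if parts:
                        self._add(tag, attr, parts[0])


def extract_attribute_urls(html: str, base_url: str) -> list[tuple[str, str, str]]:
    parser = UrlAttributeParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.urls
```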

# URL EXTRACTOR FROM MULTIPLE WEBSITE INSTALL

You all know that dynamic websites, for example e-commerce sites, have many webpages that are generated on the fly from the request or query passed. In such a case, it gets complicated to keep track of all the webpages on the site, doesn't it? There is just the right tool available at SiteOpSys to help you with this.

URL extraction is at the core of link checkers, search engine spiders, and a variety of web page analysis tools. The module needed is bs4: Beautiful Soup (bs4) is a Python library for pulling data out of HTML and XML files. It does not come built in with Python; to install it, run `pip install beautifulsoup4` in the terminal.
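One hypothetical way to get a handle on such a site, not SiteOpSys's method, is to group the URLs you have collected by everything except the query string. Each group then roughly corresponds to one dynamic page template, and the query-parameter names show what varies between its pages. The function name and return shape below are invented for illustration.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def group_dynamic_urls(urls):
    """Group URLs that share scheme, host and path but differ in their query string.

    Returns {base URL: (number of distinct URLs, sorted query-parameter names)}.
    """
    variants = defaultdict(set)  # base URL -> distinct full URLs seen
    params = defaultdict(set)    # base URL -> query-parameter names seen
    for url in urls:
        parts = urlsplit(url)
        base = f"{parts.scheme}://{parts.netloc}{parts.path}"
        variants[base].add(url)
        params[base].update(name for name, _value in parse_qsl(parts.query, keep_blank_values=True))
    return {base: (len(variants[base]), sorted(params[base])) for base in variants}


if __name__ == "__main__":
    crawled = [
        "https://shop.example/product?id=1",
        "https://shop.example/product?id=2&color=red",
        "https://shop.example/search?q=shoes&page=2",
    ]
    for base, (count, names) in group_dynamic_urls(crawled).items():
        print(base, count, names)
```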
